CREU 2011

American Sign Language (ASL) is the language of the Deaf in North America. It is a visual/gestural language distinct from English, with its own grammar and language processes. Many people who use ASL as their preferred language have great difficulty reading English. In fact, the average reading level of Deaf adults in the U.S. falls between the third- and fourth-grade levels, which creates barriers to employment and social services. An automatic English-to-ASL translator would make both spoken and written English more accessible to the Deaf community.

An essential part of an English-to-ASL translator is an ASL synthesizer, which creates 3D computer animations of ASL for the user of the translation system to view. At present, no complete synthesizer of this kind exists. Our 3D sign-language avatar can already produce signs that involve hand and head movement, but ASL grammar also requires movement of the torso and lower body. Lower-body movement appears in some types of verb agreement, as well as in storyteller mode, where a signer recounts a dialog involving two or more signers. This project will create lower-body rigging to support these syntactic processes when synthesizing ASL.

- What body stances facilitate verb agreement and storyteller mode in ASL?

Although linguistic texts give a qualitative characterization of these stances, their descriptions are not specific enough to permit a geometric implementation. Modeling the stances requires acquiring and studying sources that depict these language processes. While there are several excellent video collections, many of them show only a frontal view of the signer. A frontal view lacks depth information, which is necessary for 3D animation.
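As a toy illustration of why a frontal view is not enough, the Python sketch below assumes an idealized orthographic front camera and uses made-up knee coordinates (not the team's data or capture method). Two poses that differ only in depth land on the same 2D point, so the video alone cannot tell them apart. A real camera uses perspective projection, but a single view still cannot recover depth unambiguously.

```python
from typing import Tuple

# (x: left/right, y: up/down, z: toward/away from the camera)
Point3D = Tuple[float, float, float]


def frontal_projection(point: Point3D) -> Tuple[float, float]:
    """Idealized orthographic front view: keep x and y, discard z (depth)."""
    x, y, _z = point
    return (x, y)


# Hypothetical knee positions for two poses that differ only in depth.
knee_neutral = (0.10, 0.50, 0.00)
knee_forward = (0.10, 0.50, 0.15)

print(knee_neutral == knee_forward)  # False: the 3D poses differ
print(frontal_projection(knee_neutral) == frontal_projection(knee_forward))  # True
```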

Mid-year update

The team has developed a low-cost method for capturing more information about these stances. Allison's blog describes it in more detail.

- What changes to the 3D computer model will be required to replicate the body stances?

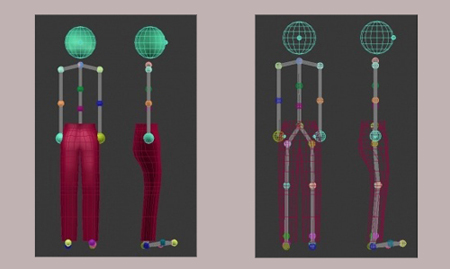

We will create a lower-body polygon model and bone rig for the current sign-language avatar. After skinning the model, students will write a program that retrieves the joint-angle values from the database and applies them to the appropriate bones. They will then use the modified avatar to create sample animations that depict the new stances in the context of complete ASL sentences.
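The step of retrieving joint angles and applying them to bones could look roughly like the Python sketch below. Everything in it is a hypothetical assumption for illustration: the bone names, the `joint_angles` table layout, the stance name `shift_right`, the Euler-angle convention, and the numeric values, which are placeholders rather than captured data. The standard-library `sqlite3` module stands in for the project's actual database.

```python
import sqlite3
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Angles = Tuple[float, float, float]  # assumed (x, y, z) Euler angles in degrees


@dataclass
class Bone:
    name: str
    parent: Optional["Bone"] = None
    # Local rotation relative to the parent bone; (0, 0, 0) is the rest pose.
    rotation: Angles = (0.0, 0.0, 0.0)


def build_lower_body_rig() -> Dict[str, Bone]:
    """Hypothetical hierarchy: pelvis -> hip -> knee -> ankle on each side."""
    pelvis = Bone("pelvis")
    rig = {pelvis.name: pelvis}
    for side in ("L", "R"):
        hip = Bone(f"hip_{side}", pelvis)
        knee = Bone(f"knee_{side}", hip)
        ankle = Bone(f"ankle_{side}", knee)
        for bone in (hip, knee, ankle):
            rig[bone.name] = bone
    return rig


def load_stance(conn: sqlite3.Connection, stance: str) -> Dict[str, Angles]:
    """Retrieve the stored joint angles for one stance, keyed by bone name."""
    rows = conn.execute(
        "SELECT bone, rx, ry, rz FROM joint_angles WHERE stance = ?", (stance,)
    )
    return {bone: (rx, ry, rz) for bone, rx, ry, rz in rows}


def apply_stance(rig: Dict[str, Bone], angles: Dict[str, Angles]) -> List[str]:
    """Copy each stored angle onto the matching bone; return unmatched bone names."""
    missing = []
    for bone_name, rotation in angles.items():
        bone = rig.get(bone_name)
        if bone is None:
            missing.append(bone_name)
        else:
            bone.rotation = rotation
    return missing


# Usage with an in-memory table; the angle values are placeholders, not captured data.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE joint_angles (stance TEXT, bone TEXT, rx REAL, ry REAL, rz REAL)"
)
conn.executemany(
    "INSERT INTO joint_angles VALUES (?, ?, ?, ?, ?)",
    [
        ("shift_right", "pelvis", 0.0, -10.0, 5.0),
        ("shift_right", "knee_L", 12.0, 0.0, 0.0),
    ],
)
rig = build_lower_body_rig()
missing = apply_stance(rig, load_stance(conn, "shift_right"))
print(rig["pelvis"].rotation, missing)  # (0.0, -10.0, 5.0) []
```

Returning the unmatched bone names, rather than silently skipping them, makes it easy to notice when the database and the rig use different naming conventions.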

Mid-year update

The polygon pants have been created and are ready for skinning! Marie's blog describes the process in more detail.

- Will the changes to the 3D computer model result in better animations of ASL?

User tests of the animations will evaluate their clarity and grammatical correctness. Based on the feedback, and time permitting, we hope to make improvements and produce a second set of animations.

Study conducted by: Allison Lewis and Marie Stumbo.

Research supervisors: Dr. Rosalee Wolfe and Dr. John C. McDonald

The CREU project is sponsored by the Computing Research Association Committee on the Status of Women in Computing Research (CRA-W) and the Coalition to Diversify Computing (CDC). Funding for this project is provided by the National Science Foundation.