CREU 2005

Lindsay and Lesley

Creation of Non-Manual Signals

Although legibility is the foremost concern in depicting signs in ASL, a believable human model is also very important to usable software, and it subtly adds redundancy that enhances legibility. This year, we are developing algorithms to add realistic body motion to Paula beyond the mandatory arm, hand and wrist motions of signs. These motions, referred to as non-manual signals, include, for example, the unconscious motion of one arm while the other arm moves in a particular direction during a sign.

Essentially, non-manual signals make Paula more convincing as a human being to the viewer, rather than letting her appear as an expressionless robot. This is similar to the way simulated inflection and timbre lend credibility to computer voice synthesizers for hearing people.

A Motion Study

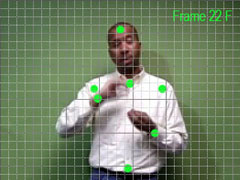

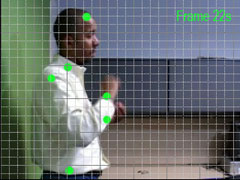

For the sake of software expandability, we determined it would be much more efficient to develop a set of rules about non-manual signals that can be applied to any sign in Paula's vocabulary than to add the motions individually to every sign. Since motion capture did not suit our needs, we studied a number of ASL phrases on specially prepared video, measuring the position of significant body points at regular frame intervals and recording each as X, Y, Z coordinates.

With this data, we could then use statistics software to calculate the correlations and trends of motion between various points of the body during different signs.
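The kind of correlation described above can be sketched in a few lines. This is a minimal illustration, not the statistics software the team used: it assumes each tracked body point is stored as a list of (x, y, z) tuples, one per sampled frame, and computes a Pearson correlation coefficient separately for each axis. The names `pearson` and `axis_correlations` are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) for one sampled frame (assumed layout)


def pearson(xs: List[float], ys: List[float]) -> float:
    """Pearson correlation coefficient of two equal-length series."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("series must have the same length (at least 2)")
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        # A coordinate that never moves has no defined correlation; report 0.
        return 0.0
    return cov / (sx * sy)


def axis_correlations(a: List[Point], b: List[Point]) -> Tuple[float, float, float]:
    """Correlate two tracked body points axis by axis (X, Y, Z) across frames."""
    cx, cy, cz = (pearson([p[i] for p in a], [q[i] for q in b]) for i in range(3))
    return cx, cy, cz
```

For example, `axis_correlations(right_wrist, right_shoulder)` would report how strongly the shoulder follows the wrist along each axis over a phrase, given two hypothetical per-frame tracks of equal length.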

Since some of the correlations we discovered proved to be more complex and situation-dependent than we initially anticipated, we have coded non-manual signals into some of Paula's phrases by hand in order to better understand certain behaviors. An example phrase, with and without the addition of non-manual signals, was made available for download in DivX format.

The second step was to develop a geometric characterization of the observed motions. This involved running correlations between the positions of the signer's wrists and other landmarks on the head, shoulders and spine. The position of a signer's hands is one of the most noticeable aspects of signing, and is in fact one of the phonemic classes of the language. This is why it was important to frame the correlation study in terms of the wrists.

What became apparent was that there was no general correlation between wrist position and other body landmarks. However, when the wrists moved outside of a certain space in front of the signer, the correlations became quite pronounced. This space, bounded vertically and horizontally by the shoulders, was dubbed the zone of comfort.
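A minimal sketch of a zone-of-comfort test follows, under stated assumptions: x is the lateral axis and y points up, the zone's width spans the two shoulders, and its top edge is the height of the higher shoulder. The text does not give a lower or depth bound, so none is applied here. `ZoneOfComfort` and `frames_outside` are hypothetical names, and the zone is rebuilt every frame because the shoulders themselves move.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float, float]  # (x, y, z); x lateral, y up (assumed convention)


@dataclass
class ZoneOfComfort:
    min_x: float  # lateral position of one shoulder
    max_x: float  # lateral position of the other shoulder
    top_y: float  # shoulder height

    @classmethod
    def from_shoulders(cls, left: Point, right: Point) -> "ZoneOfComfort":
        # Width spans the two shoulders; the top edge is the higher shoulder (assumed).
        return cls(min(left[0], right[0]), max(left[0], right[0]), max(left[1], right[1]))

    def contains(self, wrist: Point) -> bool:
        # Inside means laterally between the shoulders and no higher than them.
        return self.min_x <= wrist[0] <= self.max_x and wrist[1] <= self.top_y


def frames_outside(left_sh: List[Point], right_sh: List[Point], wrist: List[Point]) -> List[int]:
    """Indices of frames in which the wrist has left that frame's zone of comfort."""
    return [
        i
        for i, (l, r, w) in enumerate(zip(left_sh, right_sh, wrist))
        if not ZoneOfComfort.from_shoulders(l, r).contains(w)
    ]
```

Restricting a correlation study to the frames returned by `frames_outside` is one way to isolate the motions in which the correlations become pronounced.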

The establishment of the zone of comfort facilitated four generalizations. These are a) fingerspelling, b) two-handed raise, c) one-handed lateral, and d) two-handed asymmetry. Each of these involves positions outside the zone of comfort.

For fingerspelling, the dominant hand rises above the zone of comfort. Natural movement then requires an additional rotation of the dominant shoulder upward and of the non-dominant shoulder downward. For a two-handed raise, both hands rise above the zone of comfort, which requires rotating both shoulders upward. The one-handed lateral signal occurs when one hand is outside the width of the zone of comfort, which produces a twist in the body. It requires rotations in the waist, spine and shoulders in the direction of the hand position. In the case of two-handed asymmetry, both hands are on one side of the midline of the body. This situation produces a similar but more pronounced twist in the waist, spine and shoulders. At present, these generalizations are being used to automate non-manual signals in the current animations.
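The four generalizations can be read as a small rule set over the two wrist positions. The sketch below is one possible encoding, not the team's animation code. Several choices are assumptions: x grows toward the signer's right and y grows upward; the midline lies halfway between the shoulders; the rules are checked in the order raise, fingerspelling, asymmetry, lateral; asymmetry also requires at least one hand outside the zone's width, since each generalization involves positions outside the zone. Rotation amounts are not given in the text, so the hints are qualitative. All names are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Optional, Tuple

Point = Tuple[float, float, float]  # (x, y, z); x toward signer's right, y up (assumed)


@dataclass
class Zone:
    min_x: float  # lateral position of the leftmost shoulder
    max_x: float  # lateral position of the rightmost shoulder
    top_y: float  # shoulder height

    @property
    def mid_x(self) -> float:
        # Body midline, assumed halfway between the shoulders.
        return (self.min_x + self.max_x) / 2

    def above(self, p: Point) -> bool:
        return p[1] > self.top_y

    def lateral(self, p: Point) -> bool:
        return p[0] < self.min_x or p[0] > self.max_x


class NMS(Enum):
    FINGERSPELLING = "fingerspelling"
    TWO_HANDED_RAISE = "two-handed raise"
    ONE_HANDED_LATERAL = "one-handed lateral"
    TWO_HANDED_ASYMMETRY = "two-handed asymmetry"


def twist(direction: str, strength: str) -> Dict[str, str]:
    # Waist, spine and shoulders all rotate toward the hands.
    return {joint: f"{direction} ({strength})" for joint in ("waist", "spine", "shoulders")}


def nonmanual_hints(dom: Point, nondom: Point, zone: Zone) -> Optional[Tuple[NMS, Dict[str, str]]]:
    """Map dominant/non-dominant wrist positions to one of the four generalizations.

    Returns None when no rule applies, e.g. both wrists inside the zone of comfort.
    """
    if zone.above(dom) and zone.above(nondom):
        return NMS.TWO_HANDED_RAISE, {"dominant_shoulder": "up", "nondominant_shoulder": "up"}
    if zone.above(dom):
        return NMS.FINGERSPELLING, {"dominant_shoulder": "up", "nondominant_shoulder": "down"}
    same_side = (dom[0] - zone.mid_x) * (nondom[0] - zone.mid_x) > 0
    if same_side and (zone.lateral(dom) or zone.lateral(nondom)):
        direction = "right" if dom[0] > zone.mid_x else "left"
        return NMS.TWO_HANDED_ASYMMETRY, twist(direction, "pronounced")
    for hand in (dom, nondom):
        if zone.lateral(hand):
            direction = "right" if hand[0] > zone.mid_x else "left"
            return NMS.ONE_HANDED_LATERAL, twist(direction, "moderate")
    return None
```

Because the rules look only at wrist positions, they can run on every frame of an existing sign without any per-sign annotation, which is the expandability goal stated earlier.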

Results

These generalizations have been implemented in the same animation system currently being used to generate ASL sentences. When viewing previous animations without non-manual signals, both Deaf and hearing viewers commented that the animations appeared somewhat mechanical. When reviewing our initial animations using the four generalizations, viewers were strongly positive in their comments. They remarked on the more lifelike quality of the motion and said it made the signing easier to understand.

Conclusions and future work

Many nonfacial non-manual signals (NMS) in declarative sentences can be modeled by observing the path created by a signer's wrists. This yields a great savings when creating computer animations depicting ASL sentences. Since the NMS can be generated procedurally, there is no need for a human animator to create them or for a computer database to store them explicitly.

Although this approach holds great promise, there is one type of NMS that it did not completely model. This NMS, called "facing", determines the body orientation toward the object of the sentence. For example, if a signer fingerspells the name "Bob", and Bob is the object of the sentence, the signer's body takes a different orientation than when "Bob" is the subject of the sentence. Next steps would include integrating ASL sentence syntax with the animation geometry to model this NMS.

Our Undergraduate Team

Our undergraduate research team consists of Lesley Carhart, a senior studying Network Technology, and Lindsay Semler, a senior studying Computer Graphics. Both intend to graduate from DePaul in 2006.